While refactoring huge amounts of code out of EJB2 (and trying not to break too many things), I noticed a bad design pattern so noxious that I thought I should blog about it.

I call this the Phony Model Objects (PMO) antipattern.

It basically amounts to exposing, as first-class model objects, entities that should never be public. These bad citizens may look innocuous at first glance. In fact, they often carry a deep functional dependency on the technical framework they come from, or they change at a different pace than actual domain objects do.

Interestingly, I have found that all these PMOs are generated, whether they are EJB2 artifacts (home interfaces, entity beans, value objects, primary keys...) or web service client stubs. This reinforces the idea that they are by-products and should be treated as such.

So, if you return or accept this kind of PMO in public methods, do not wait: refactor your code now so that it shares only proper domain model objects and keeps the phony ones hidden behind the surface of your API.

This will eventually save you a lot of pain and effort when the technical framework your PMOs are coupled to disappears and you have to move away from them. Trust me on that one!
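To make the refactoring concrete, here is a minimal Java sketch of one way to keep a generated object behind the API surface. Every name in it (`CustomerValueObject`, `Customer`, `LegacyCustomerGateway`, `CustomerService`, and the status code encoding) is a hypothetical stand-in invented for illustration, not code from the project discussed here.

```java
import java.util.Optional;

// Stand-in for a generated artifact such as an EJB2 value object or a web
// service stub. Hypothetical: a real one would come out of a code generator.
final class CustomerValueObject {
    final String primaryKey;
    final String fullName;
    final int statusCode; // technical encoding that should not leak out

    CustomerValueObject(String primaryKey, String fullName, int statusCode) {
        this.primaryKey = primaryKey;
        this.fullName = fullName;
        this.statusCode = statusCode;
    }
}

// Proper domain model object: immutable and free of framework types.
final class Customer {
    private final String id;
    private final String name;
    private final boolean premium;

    Customer(String id, String name, boolean premium) {
        this.id = id;
        this.name = name;
        this.premium = premium;
    }

    String id() { return id; }
    String name() { return name; }
    boolean isPremium() { return premium; }
}

// Hypothetical gateway to the legacy layer; it stays behind the API surface.
interface LegacyCustomerGateway {
    CustomerValueObject load(String primaryKey); // null when nothing is found
}

// The API surface: only domain objects are accepted and returned.
final class CustomerService {
    private static final int PREMIUM_STATUS = 1; // assumed legacy encoding

    private final LegacyCustomerGateway gateway;

    CustomerService(LegacyCustomerGateway gateway) {
        this.gateway = gateway;
    }

    Optional<Customer> findCustomer(String id) {
        return Optional.ofNullable(gateway.load(id)).map(CustomerService::toDomain);
    }

    // The single place where the phony object is translated.
    private static Customer toDomain(CustomerValueObject vo) {
        return new Customer(vo.primaryKey, vo.fullName, vo.statusCode == PREMIUM_STATUS);
    }
}
```

With this shape, callers only ever see `Customer`. When the framework behind `LegacyCustomerGateway` goes away, the translation in `toDomain` is the only code that should need to change.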

Wednesday, December 31, 2008

Tuesday, December 23, 2008

MuleCast: A Conversation with the creator of the JCR Transport

Ross Mason has interviewed me (again) about the JCR transport for Mule. I also had a chance to say a few words about the brand new Common Retry Policies module.

Enjoy!

Saturday, December 20, 2008

A month of e-reading

After months of procrastination, I have decided to give e-ink a try and go paperless as much as I can. I have been using a Sony PRS-700 for a month now and I must say I am really happy with it.

I opted for the Sony reader because of its openness: you plug it into a USB port and voilà! You are free to drag and drop files into the reader.

I have used it in all sorts of lighting and places, from the dim light and shaky environment of public transportation to the comfort of a sunlit office. So far I have successfully used it for:

- Paperless meetings: instead of printing meeting material, I now upload it to my reader.

- Long blog entries: I simply cannot read long articles on a computer screen, so I use the reader for long blog posts.

- Reference guides: the search feature of the reader is convenient for finding passages in reference guides or technical books (like Mule in Action).

- Book reviews: instead of printing the books I am reviewing, I now upload them to the reader. The annotation feature is a little too slow for taking long notes on pages but fast enough to attach a few words that serve as reminders when building the final review.

- Full-fledged e-books, of course! So far, I have only read a few technical e-books from InfoQ. Hundreds of pages later, I can tell it is a really great reading experience. I sometimes have to zoom in on a diagram, but most of the time the landscape orientation guarantees seamless reading.

Monday, December 15, 2008

How To Guarantee That Your Software Will Suck

You may have recently read two eponymous blog posts about this crucial subject, so I won't give you ten more ways to suck at programming but just one.

Here it is:

Don't critique your code.

If you never ever take a critical look at your code, you are on your way to writing software that sucks.

People who pair program may chuckle: they already have a continuous critique process that helps produce high-quality code. People who practice code reviews may chuckle too: they already have a process (and, often, tools) for ensuring code is properly discussed.

So what is left for those who do not pair program or practice code review? The same applies: whenever you come back to your own code, critique it. Often, while adding a new feature, you will realize that a particular design is convoluted, a class is ill-named or a method is hard to read.

Do not fall into complacency with your own code: love it, hate it, critique it, refactor it!

Labels:

Craftsmanship

Saturday, December 13, 2008

Software That Kills

In the latest installment of his monthly IEEE Software column, Philippe Kruchten asks about the relevance of licensing software engineers. In this article, he states:

The only purpose of licensing software engineers is to protect the public.

He then details the reasons why it makes sense to license people who write or certify “software-that-kills”.

Even if I am not licensed (and may never be), I fully agree with his position. In fact, I feel compelled to ask why licensing should be limited to software that kills people. What about software that kills businesses? What about software that kills privacy?

Except when human lives are in jeopardy, software developers are basically free to do whatever they want when they build an application. Businesses and end users are left to goodwill and luck when it comes to getting the professional standards they can legitimately expect from software developers.

Should licensed software engineers be engaged to at least review the design and architecture of software that can kill businesses or privacy? Would it be enough to restore trust in a profession from which the common wisdom is to expect so little?

Labels:

Craftsmanship

A new module for Mule!

I am happy to announce the very first release of the Common Retry Policies module for Mule 2.

The goal of this project is to encourage the Mule community to nurture a set of production-grade retry policies. At this point, the available policies have been tested only with a limited set of transports (JCR with JBossMQ and ActiveMQ).

I welcome feedback from the community in several forms:

- Configuration samples that worked for you,

- Bug reports, fixes and improvement patches,

- Feature requests.

Labels:

Mule

Thursday, December 04, 2008

Hope in hardship?

"Hard times provide opportunity for change. Can't afford for a project to fail? Applying agile development principles can help. Doing projects with fewer people? Applying agile development principles can help. Limited resources throw options in sharp relief where a surfeit of resources makes putting off change easier." (Kent Beck, posted in Agile Interest Group Luxembourg)

"Right now every organisation is assessing all their costs (most companies have cut between 10-30% of their workforce). In this process, CIO and Managers are being asked to do more with less, to keep the engine running with half the budget. This means they have to look to open source." (Ross Mason, from his blog)

These quotes suggest there is a future for agile-minded, open-source-addicted developers out there. Time will tell...

Labels:

Craftsmanship,

World

Wednesday, November 26, 2008

Last Night's Eclipse Demo Camp

I attended my first Eclipse Demo Camp last night in Vancouver and it was a really great event! Of course, the profusion of food and beer offered by the Eclipse Foundation at the end of the evening surely helped build such a great impression...

The whole evening was driven by the Tasktop guys, which gave us a chance to get a feel of the interesting ecosystem that is growing around Mylyn. I would like to turn the spotlight on two projects which are worth discovering on your own:

- Tripoli, an EclEmma-powered code coverage diff tool that allows you to pinpoint issues in an efficient manner.

- TOD, a so-called omniscient debugger that allows you to do back-in-time debugging without a DeLorean (which made me think a lot about what ReplaySolutions is building).

As a closing note, if you happen to be in Luxembourg tomorrow evening be sure to hit the Eclipse Demo Camp over there. You will have a chance to see Pierre-Antoine and Fabrice live, which is really worth the trip!

Labels:

Conferences,

Tools

Monday, November 24, 2008

Mulecast: “Mule in Action” book interview

Ross Mason has interviewed John D'Emic and me about Mule in Action.

If you listen to the podcast, you will get a 27% discount voucher as compensation for the ear damage caused by my broken English.

Isn't life bliss?

Monday, November 17, 2008

Seven Years To Rot

So here we are, seven years after the Manifesto, and the webersphere is pontificating about the decline of agile, to the point that Uncle Bob has a hard time containing his anger in front of such a display of nonsense and dishonesty.

What is going on with agile? After seven years of existence (I know, the techniques and approaches agile promotes predate the Manifesto), has it started to rot?

Some suggest that agile is following the Gartner hype cycle and has now passed its "peak of inflated expectations".

Of course, there is hype around agile and the diatribes of some of its fanatics can be a little over the top. But I think that the current "decline" in agile is not due to disillusionment.

I think this so-called "decline" comes from the numerous software sweatshops and less-than-average programmers who started to pretend they do "agile" without changing anything in their craftsmanship. Instead of disillusionment, agile suffers from dilution.

Hopefully, people will be able to sort the wheat from the chaff and debunk these agile fraudsters. When this happens, agile will start climbing its "slope of enlightenment".

Labels:

Craftsmanship

Thursday, November 13, 2008

The Path of Least Resistance

While fighting with monsters of legacy code, I have kept thinking of all the possible reasons why developers would end up building such a man-made hell. My capacity to analyze the situation and figure out possible causes is limited to my knowledge of the external forces that these developers were exposed to. For what was happening inside of them, I am limited to building analogies from my own battles and shortcomings.

I have been trying to distill a single question. Here it is: why would a developer choose to walk the path of least resistance?

Though skill, experience and professional standards are factors at play, I do not think they can explain the whole picture. Deadline pressure certainly encourages cutting corners and following the easiest and dirtiest path. But the crux of the problem is not there. It is in the resistance.

What is this resistance anyway? It is multiform and hard to characterize. Any developer has surely felt at least once the slight despair it entails: you want to make progress and get blocked by a wall of resistance that is not insurmountable but would incur a great cost in terms of work disturbance.

Here are a few examples of the outcomes produced by such resistance:

- Teams entrenched behind layers of management feel encouraged not to wait for the others to do something that they need and end up building sub-optimal solutions with what they have now.

- High cost of database changes can drive developers to extremes like the reuse of existing fields, the use of inadequate field types or the storage of serialized objects in BLOBs when a few extra columns would have done the trick.

- Long feedback loops between a code change and the result of testing orient developers towards implementing new features with minimal changes to existing code, which ends up with code duplication, spaghetti plates and design-less applications.

Making intelligent decisions while being goal driven is hard. Everybody knows that the path of least resistance is rarely the best one. Alas, we walk it and, I suspect, not out of laziness but out of resignation.

Labels:

Craftsmanship

Tuesday, November 11, 2008

Just Read: Facts and Fallacies of Software Engineering

Sometimes, when I read a book, I look at the publication date and wonder out loud: "what on earth was I doing that year to miss this one?". This is exactly what happened while reading this book by Robert L. Glass. What on earth was I doing in 2002 to have missed such an excellent book?

Known as the F-Book, after its originally proposed title ("Fifty-Five Frequently Forgotten Fundamental Facts (and a Few Fallacies) about Software Engineering", which thankfully was not kept by the editor), this dense book is truly the sum of all truths and untruths about software engineering.

This book is really invaluable as an aid for debunking hype of all sorts and performing reality checks on tools and methods that oftentimes are dumped on software engineers with the promise of a brighter future (that never materializes).

With half a century in the field, Robert has seen it all and heard it all already. If you think your problems or questions are new or unique, then read this book and keep it, because you will have to come back to it and quote it whenever you need to challenge a snake-oil vendor or an ivory-tower methodologist (pretty much like you do with the anti-patterns catalog for development malpractices).

Labels:

Readings

Monday, November 10, 2008

Silver Still Very Light

I have just switched to the latest version of Moonlight, the port of Microsoft Silverlight to Linux, and the least I can say is that things have progressed significantly since the last time I tried.

So far, I have found only one useful site whose Silverlight application runs fine on Linux: the Ryanair destination map. Though the plug-in reports a rendering speed of close to 100 FPS, the zooming and panning are still way behind what pure JavaScript or Flash applications provide. But it is very impressive already.

The only problem seems to reside in the Silverlight detection algorithm used by most of the sites. Most of them give me the Redmond finger:

... while Moonlight is there and ready to chew XAML and render it! Too bad, I would like to see more of these sites and keep track of how this runner-up technology will compete with the ones already in place.

At the end of the day, I have no doubt Silverlight/Moonlight can become a credible alternative to the current RIA browser-side technologies. My main concern is that sloppy developers may create Silverlight-powered sites that will only work with a limited set of platforms (browsers, OSes), which would be a regression from the current state of the web nation.

Saturday, November 08, 2008

Where the hell is... Alineo?

After Matt and his little dance, my brother-in-law, of VoilaSVN fame, has decided to go freelining worldwide.

Two episodes so far: the classic Grand Canyon and the way more exotic Luxembourg.

Enjoy.

Labels:

Off-topic

Friday, November 07, 2008

Reality Check Levels

I am in a "question everything" phase. Maybe it is a side-effect of reading the excellent F-book (review coming soon) or the outcome of learning about the software half-life hypothesis? All in all, I am more and more dubious about what I read and hear about in the field of software. I have grown tired of half-baked ideas (including mine) that present possibilities as realities and niche solutions as widely applicable ones.

It seems this is natural: smart people have started to change their views on important subjects. Since I am not that smart, I would like reviewers of articles and publications to add something like the color-coded terrorist threat level to indicate the reality-check level of what I am reading.

That could be something like:

RC0: This is highly hypothetical material. Mainly works in the vendor's environment, on the open source committer's laptop or in the researcher's lab.

RC1: This plays well in the F100 or governmental field. Complex committee-driven years-long BDUF-minded expensive concepts can be applied here. But nowhere else.

RC3: This is the field of pragmatists. The ideas, methodologies or products are based on simple, well-established and proven concepts.

Like the threat level, this scale is coarse and oversimplistic. Would you like it anyway? Would you add more levels?

Labels:

Fun

Thursday, November 06, 2008

Legacy Tests

In Working Effectively with Legacy Code, Michael Feathers states that, for him, "legacy code is simply code without tests". As he says himself, he has "gotten some grief for this definition". I can understand why.

I have come to deal with some legacy code monsters recently and came to realize that this code had tests written for it. So could it be that this code was not legacy after all?

After a closer inspection, the truth finally came out:

- the test coverage is very low (less than 30%),

- the tests explore counter-intuitive paths (for example, just the unhappy ones),

- the tests themselves are monstrous (created in what looks like a copy/paste spree),

- they overuse mocks in a lax manner (many are not verified at tear down),

- they do not verify the complete state after execution (no validation of indirect outputs like request and session attributes),

- and, as a corollary of the legacy framework the code targets (EJB 2), full correctness can only be proven when deployed in an application container.

Else you will end up with nearly worthless legacy tests.
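As a minimal sketch of what two of the missing checks could look like, the plain-Java example below verifies an indirect output (a session attribute) and verifies a mock once the exercise is over. All the types in it (`AuditLog`, `RecordingSession`, `MockAuditLog`, `LoginAction`, `LoginActionCheck`) are hypothetical and hand-rolled for illustration; they do not come from the code base discussed here or from any mocking library.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical collaborator of the code under test.
interface AuditLog {
    void record(String event);
}

// Hypothetical stand-in for a session: it records attributes so that a
// test can inspect them afterwards (an indirect output).
final class RecordingSession {
    private final Map<String, Object> attributes = new HashMap<>();

    void setAttribute(String name, Object value) { attributes.put(name, value); }
    Object getAttribute(String name) { return attributes.get(name); }
    int attributeCount() { return attributes.size(); }
}

// Hand-rolled mock: counts calls so expectations can be verified at the end.
final class MockAuditLog implements AuditLog {
    private final int expectedCalls;
    private int actualCalls;

    MockAuditLog(int expectedCalls) { this.expectedCalls = expectedCalls; }

    @Override
    public void record(String event) { actualCalls++; }

    void verify() {
        if (actualCalls != expectedCalls) {
            throw new AssertionError(
                "expected " + expectedCalls + " audit call(s), got " + actualCalls);
        }
    }
}

// Hypothetical code under test: returns a view name and, as side effects,
// writes to the session and to the audit log.
final class LoginAction {
    private final AuditLog auditLog;

    LoginAction(AuditLog auditLog) { this.auditLog = auditLog; }

    String execute(String user, RecordingSession session) {
        session.setAttribute("user", user);
        auditLog.record("login:" + user);
        return "home";
    }
}

final class LoginActionCheck {
    static void run() {
        MockAuditLog auditLog = new MockAuditLog(1);
        RecordingSession session = new RecordingSession();

        String view = new LoginAction(auditLog).execute("peter", session);

        // Direct output.
        require("home".equals(view), "unexpected view");
        // Indirect outputs: the complete session state after execution.
        require("peter".equals(session.getAttribute("user")), "user attribute not set");
        require(session.attributeCount() == 1, "unexpected extra session attributes");
        // Mock verification, as a tear-down step would do it.
        auditLog.verify();
    }

    private static void require(boolean condition, String message) {
        if (!condition) throw new AssertionError(message);
    }
}
```

Calling `LoginActionCheck.run()` passes silently; removing the `auditLog.record` call from `LoginAction` would make `verify()` fail, which is the kind of regression an unverified mock lets through.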

Labels:

Craftsmanship,

Testing

Tuesday, November 04, 2008

Two Thirds of a Mule in Action

I am happy to report that two thirds of Mule in Action is now available from Manning's Early Access Program.

If you were deferring buying the book, now is a great time to part with a few bucks and get it! Indeed, you will find in these 280 pages a lot of practical knowledge about Mule that John and I have put together for your utmost enjoyment!

And just to thank you for spending your time reading this blog, I will share a little secret with you. If you use the aupromo25 promo code when buying the book, you will get 25% off the price. But please, don't repeat it.

Finally, just so you don't think it is all about money, you can always get the source code for the book for free. That is zero dollars, which is also zilch euros and not much more of any other currency you happen to have in your pocket.

Saturday, November 01, 2008

JBound 1.1 is out

I have just released version 1.1 of JBound, my simple utility that performs boundary checks on domain model objects.

This new release features native support for more JDK data types and the possibility to register custom data types.

Consult the daunting one-page user guide for more information.

Friday, October 24, 2008

SpringSource's Elitism

The SpringSource community is elitist and despises juniors. Here is the captcha I just got on their forums:

Why so much hatred? Seriously, guys?

Labels:

Fun

Half Life And No Regrets

In the latest edition of the excellent IEEE Software magazine, the no less excellent Philippe Kruchten has conjectured about the existence of a five-year half-life for software engineering ideas. That would mean that 50% of our current fads would be gone within five years.

Though not (yet) backed by any hard numbers, his experience (like mine and yours) suggests that this is most probably true.

All in all, the sheer idea of such a half-life decay pattern helps let obsolete knowledge go without regret. It is fine to forget everything about EJBs and code with POJOs. JavaBeans are evil: you can come back to pure OO development. You can bury your loved-hated-O/R-mapper skillset in favor of writing efficient SQL. It is OK to drop the WS-Death* for REST (this one is purposefully provocative, if a WS-nazi reads this blog I am dead).

Unlearning things that were so incredibly painful to learn may feel like a waste but it is not. This half-life decay is in fact great news because it translates into less knowledge overload and more focus on what matters... or, unfortunately, the adoption of new short-lived fads! And the latter is the scariest part. How much of what we are currently learning will survive more than five years?

Nothing platform or even language specific, I would say. But sound software engineering practices will remain: building elevated personal and professional standards, treasuring clean code, challenging our love for hype with the need for pragmatism...

This is where our investment today must be: in the things that last.

Labels:

Craftsmanship

Just Read: The Productive Programmer

In this concise book, Neal Ford shares his hard-won real-world experience with software development. Because he consults for the best, his experience is diverse and rich, hence definitely worth reading.

This book feels a lot like "The Pragmatic Programmer Reloaded": same concepts with updated samples and situations. It is a perfect read for any junior developer, as the book will instill the right mindset. It is also a recommended book for any developer who struggles with his own practice (all of us, right?) and wants to shift into the next gear.

Personally, having attended some of Neal's conference talks and being somewhat of an old dog in the field of software, I kind of knew where he would take me in this book, hence I did not have any big surprise or make any huge discovery while reading it. That said, three chapters are truly outstanding and can speak to experienced geeks: "Ancient Philosophers", "Question Authority" and "Polyglot Programming".

Labels:

Readings

Saturday, October 18, 2008

Two-Minute Scala Rules Engine

Scala is really seductive. To drive my learning of this JVM programming language, I thought I should go beyond the obvious experiments and try to tackle a subject dear to my heart: building a general purpose rules engine.

Of course, the implementation I am going to talk about here is naive and inefficient, but the rules engine is just a pretext: this is about Scala and how it shines. It also covers only a subset of what Scala is good at: I mainly scratch the surface by focusing on pattern matching and collection handling.

The fact representation I use is the same as the one used by RuleML: a fact is a named relationship between predicates. For example, in "blue is the color of the sky", color is the relationship between blue and sky. The rules engine matches these facts on predefined patterns and infers new facts out of these matches.

In Scala, I naturally opted for using a case class to define a fact, as the pattern matching feature it offers is all that is needed to build inference rules:

Normally you do not need to implement your own hashcode functions, as Scala's default ones are very smart about your objects' internals, but there is a known issue with case classes that forced me to do so.

For rules, I opted to have them created as objects constrained by a particular trait:

The method of note in this trait is run, which accepts a list of n facts and returns zero (None) or one (Some) new fact, with n equal to the value of the arity function.

So how is the list of facts fed to the rule run method created? This is where the naive and inefficient part comes into play: each rule tells the engine the number of facts it needs to receive as arguments when called. The engine then feeds the rule with all the unique combinations of facts that match its arity.

This is an inefficient but working design. The really amazing part is how Scala shines again by providing all that is required to build these combinations concisely. This is achieved thanks to the for-comprehension mechanism, as shown here:
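As an illustration, here is a minimal sketch of a combination function built on a for-comprehension. The object and function names are assumptions, and it may well differ from the engine's actual code.

```scala
object Combinations {
  // All selections of n elements from items, each element used at most once,
  // keeping the elements in their original list order.
  def combinations[T](n: Int, items: List[T]): List[List[T]] =
    if (n == 0) List(Nil)
    else
      for {
        (head, index) <- items.zipWithIndex
        // Only look at elements after head, so each selection appears once.
        rest <- combinations(n - 1, items.drop(index + 1))
      } yield head :: rest
}
```

For example, Combinations.combinations(2, List(1, 2, 3)) yields List(List(1, 2), List(1, 3), List(2, 3)). Note that this sketch produces unordered selections: if a rule depends on the order in which it receives its facts, permutations would be needed instead.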

Notice how this code is generic and knows nothing about the objects it combines. With this combination function in place, the engine itself is a mere three functions:

With this in place, let us look at the facts and rules I created to solve the classic RuleML discount problem (I added an extra customer). Here is the initial facts definition:

And here are the two rules:

With this in place, calling:

produces this new fact base with the correct discount granted to good old Peter Miller but not to the infamously cheap John Doe:

This is truly a two-minute rules engine: don't ship it to production! Thanks to the standard pattern matching and collection handling functions in Scala, this was truly a joy to develop. On top of that, Scala's advanced type inference mechanism and very smart and explicit compiler take strong typing to another dimension, one that could seduce duck typing addicts.

So why does Scala matter? I believe the JVM is still the best execution platform available as of today. It is stable, fast, optimized, cross-platform and manageable. An alternative byte-code compiled language like Scala opens the door to new approaches in software development on the JVM.

Closing note: an interesting next step would be to parallelize the engine by using Scala actors. But this is another story...

Labels:

Scala

Wednesday, October 08, 2008

Database Cargo Cult

Today, I attended an epic product walk-through. When the demonstrator came to explore the application's database and opened the stored procedures directory, the audience was aghast at the shocking display of hundreds of these entities. I have been told this is the canonical way of developing applications in the Microsoft world. That may well be, as it creates a favorable vendor coupling, but, to me, it is more a matter of database cargo cult.

The fallacy of the database as an application tier is only equaled by the folly of using it as an integration platform. Both approaches create a massive technical debt that takes many years to pay off, if it is ever paid. Why? Because both approaches lack the abstraction layer that you need to create loosely coupled systems. Why again? Because both approaches preclude any sound testing strategy.

I have already talked about what I think are valid use cases for stored procedures. You do not need to believe me. Check what my colleague Tim has to say about it. And you do not need to believe him either, but at least read what Neal Ford says about it.

If you are still not convinced, then I have for you the perfect shape for your diagrams:

1BDB stands for one big database. And if you are in a database cargo cult, you already know why it is in flames.

Labels:

Craftsmanship

Thursday, October 02, 2008

Jolting 2008

The doors are now open for the 2008 Jolt Product Excellence Awards nominations.

If you have a great book or a cool product that has been published or had a significant version release in 2008, then do not wait any longer and enter the competition!

Oh, did I mention that early birds and open-source non-profit organizations get a discount?

Labels:

DDJ

Wednesday, October 01, 2008

Just Read: Working effectively with legacy code

This book by Michael C. Feathers came at a perfect moment in my software developer's life, as I started to read it right before I came to work on a legacy monstrosity.

The book is well organized, easy to read and full of practical guidelines and best practices for taming the legacy codebases that are lurking out there.

I really appreciated Michael's definition of legacy code: for him "it is simply code without tests". And, indeed, untested code is both the cause and the characteristic of legacy code.

Near the end of the book, Michael has written a short chapter titled "We feel overwhelmed" that I found encouraging and inspiring. Yes, working with legacy code can actually be fun if you look at it from the right angle. My experience in this domain is that increasing test coverage is elating, deleting dead code and inane comments is bliss and seeing design emerge where only chaos existed is ecstatic.

Conclusion: read this book if you are dealing with today's legacy code or if you do not want to build tomorrow's.

Labels:

Craftsmanship,

Readings

Tuesday, September 30, 2008

Can Mule Spring further?

Just wondering.

Mule 2 is already very Spring-oriented, or at least very Spring-friendly. Will Mule's distribution, which is currently a nice set of Maven-built artifacts, evolve to a set of OSGI bundles a la Spring for version 3?

If MuleSource follows that path and distributes Spring Dynamic Modules, that would allow people to run on the SpringSource dm Server, if they want to, and benefit from niceties like hot service replacement.

I doubt that Mule could use SpringSource dm Server as a default platform unless they clear up what I think could be licensing incompatibilities. But end users could make that choice and put the two Sources, Mule and Spring, together.

Just wondering.

Wednesday, September 24, 2008

The Build Manifesto

My ex-colleague Owen has just introduced the Build Manifesto. I invite you to read it and share your thoughts with him.

Labels:

Craftsmanship,

Tools

Tuesday, September 23, 2008

Soon Serving Spring

I finally had a chance to step beyond trivialities with the SpringSource dm Server.

To make things clear, this server is all about giving life to Spring Dynamic Modules (DM). Spring DM is not new but putting this technology in action was awkward: you had to deal with an embedded OSGI container and figure a lot of things out. The SpringSource dm Server provides an integrated no-nonsense environment where dynamic modules live a happy and fruitful life.

The benefits of dynamic modules are well known, and mostly derived from the OSGI architecture. The goodness Spring adds on top of OSGI is the clean model for service declaration and referencing. This is priceless as it enables a truly highly cohesive and loosely coupled application architecture within a single JVM. If you come from a world of EJBs and troubled class loaders, this is the holy grail.

To exercise this sweet platform beyond the obvious, I have built the prototype of a JMS-driven application. The architecture was pretty simple: a bundle using Message Driven POJOs to consume a Sun OpenMQ destination, which then informs clients of new messages through a collection of listener service references. The idea was to allow the deployment of new clients or the hot replacement of a particular client at runtime. Verdict? It just works. The SpringSource dm Server goes to great lengths to isolate you from bundles going up and down (depending on the cardinality of your references to OSGI services, you may still get a "service non available" exception).

What I really enjoyed was the possibility to use bundles of Spring wired beans as first class citizen applications. Gone is the need for wrapping Spring in a web application to get a proper life cycle... POJOs rule!

Version RC2 of the server still has some rough edges (for example, the deployment order in the pickup directory is fuzzy and the logging messages are badly truncated and ill formatted) but they are minor compared to the amount of work done on the platform itself.

For me, the main challenge remains in making this server truly production-grade. Here are some points that I think need improvement:

- Consoles all the way down: You have to juggle between the Web Admin Console, the Equinox OSGI console and JConsole to perform different bundle management operations. The SpringSource dm Server needs a single console to rule them all.

- The configuration management is unclear: I ended up using an extra module, the Pax ConfigManager, to load external properties files into the OSGI Configuration Admin service. The SpringSource dm Server needs a default tool for this before going to production.

- A proper service shell script is missing: you just get a start and a stop script, which is not enough for production. A single script with the classic start/restart/stop/status commands would be way better. If the matter of licensing was not such a hot subject in SpringSource currently, I would suggest that they use Tanuki's Wrapper as their boot script.

If you are a latecomer like me and have not started to seriously investigate this technology, now is the time.

Sunday, September 21, 2008

Final painted all over it

In my previous post, I mentioned that I let Eclipse add final modifiers everywhere in my code. I was initially reluctant to embrace this idea.

Then I came to work on a piece of code which was heavily dealing with string processing. The temptation to re-use a variable and assign a different string to it several times, as the processing was happening, was really high. Using the final keyword forced me to declare a new variable each time. The great benefit was that I had to create a new variable name each time, thus making the code clearer in its intention.

Hence, using only final variables makes the code stricter and cleaner. Moreover it is a safety net for the days when I am sloppy: final variables give me the big no-no if I feel inclined to repurpose one of them!

In Clean Code, Uncle Bob advocates against such a systematic use of the final keyword because it creates too much visual clutter. I agree with him, though you quickly learn to visually ignore these keywords. Scala has solved the clutter issue elegantly thanks to specific declaration keywords instead of a modifier (val for values and var for variables).
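As a small illustration of the point, here is a hedged sketch of a string processing chain written with val only. The object name, the function and the processing steps are made up for the example; each intermediate result gets its own name, and any attempt to reassign one of them is rejected by the compiler.

```scala
object ValVersusVar {
  // Hypothetical string clean-up: every step is bound to a new, named val.
  def normalize(raw: String): String = {
    val trimmed = raw.trim
    val lowered = trimmed.toLowerCase
    val collapsed = lowered.replaceAll("\\s+", " ")
    // trimmed = "something else"  // would not compile: reassignment to val
    collapsed
  }
}
```

Declaring trimmed with var instead would allow the commented-out reassignment, which is exactly the kind of repurposing the final keyword prevents in Java.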

Did I just say Scala?

Labels:

Craftsmanship

Saturday, September 20, 2008

Microtechniques: a little more conversation?

Interestingly, just as I was about to write about the pleasure of not typing much while coding in Eclipse, J. B. Rainsberger posted about the potential importance of typing fast. I guess this is the nature of the webernet: everything and its opposite at the same time, and leave it to the readers to sort things out!

I was reflecting on my coding habits with Eclipse after a thread in the Java forums, where a poster was complaining about the continuous compilation feature, prompted some internal debate (me, myself and I often disagree). Interestingly, this feature has been touted by others as a key one.

I personally like the continuous feedback. The rare times I write C# code in SharpDevelop, it takes me a while to remember that I have to tell the IDE to look at my code. First, I do not like to have to tell my friend the computer to do things. Second, I want the continuous feedback. I do not mind if the compiler is temporarily confused because my syntax is momentarily broken. I want the early warning that this feature gives me.

In fact, continuous compilation creates a neat state of flow, where you actually engage in a conversation with the IDE. And this is where my stance about not typing comes into play. I do not type class names, but merely each capital letter of the name, and let the code assistance propose something that fits. I do not write variable declarations: I either let the IDE assign them automatically or extract them from existing code. I write method declarations less and less, and extract them instead. I allow Eclipse to add keywords (like final) and annotations (like @Override) everywhere it thinks necessary. I leave it up to the code formatter to clean up my mess and make it stick to the coding conventions in place.

All these automatic features do not dumb me down. They establish a rich conversation between my slow and fuzzy brain and the fast and strict development environment. The compiler tells me: "ok, this is what I understand" and the IDE tells me: "alright, here is what I propose". And then I correct course or keep going.

So maybe we need a little less typing and a little more conversation?

Labels:

Craftsmanship,

Tools

Tuesday, September 16, 2008

Just Read: Clean Code

I had been expecting this new book from Uncle Bob for quite a while so, as soon as I got my copy, I rushed through it!

If you have no idea who this guy named Robert C. Martin is and mainly expect people to "send you teh codez", then you have to read this book. This will not transmogrify you into a craftsman but, at least, you will get a fair measure of the journey you still have to go through and be pointed in the right direction.

If you are familiar with Uncle Bob's writings and attend his conference talks, there will be no new concepts for you in this book. It will still be an insightful read because of the extensive code samples and the refactoring sessions where you can actually follow the train of thought, and the actions it entails, as if you were in the master's head. It's like being John Malkovich, but a little geekier.

The book itself is somewhat structurally challenged and lacks a little consistency, from a reader standpoint.

But who cares? As long as you can read this kind of stuff:

Clean code is not written by following a set of rules. You don't become a software craftsman by learning a list of heuristics. Professionalism and craftsmanship come from values that drive disciplines.

Robert C. Martin, Clean Code

... is the form so important?

Labels:

Readings

Thursday, September 11, 2008

Colliding Cow Bells

I found that the original Large Hadron Collider Rap was lacking cowbells. Here is the revised version that is now fully compliant with Christopher Walken and Blue Oyster Cult's standards:

Friday, September 05, 2008

Code Onion

The code of an application is like an onion, which is why it may make you cry sometimes. It looks like this:

The core

- This is the code domain where unit test coverage is the highest, hence refactoring is free. This code is as clean as it can be. This is the comfortable place where everybody wants to work. Thanks to high test coverage, the feedback loop is short and fast so morale and courage are high when it comes to touching anything in this area.

- This is where most of the compromises happen. Dictated by the use of frameworks or application containers, code becomes invaded with inane accessors, class names end up hard-coded in configuration files, out-of-code indirections (like JNDI based look-ups) weaken the edifice. Unit and integration tests help build reasonable confidence but some refactorings can induce issues that can only be detected when deploying in a target container. This slow and long feedback loop reduces the opportunities to make things better in this area.

- This is the outside world, where the rift between the world of code and the harsh reality of life resides. Databases inhabit this place and provide great services but they mismatch with objects. The network is there too, always happy to teach your application pesky lessons about latency, dropped packets or broken pipes. Worst of all, users (yes, Tron, they exist) have invaded this area: from there they will constantly find creative ways to abuse your innocent code.

Labels:

Craftsmanship,

Fun

ESB Testing Strategies with Mule

SOA World Magazine has just put online my latest article, "ESB Testing Strategies with Mule", which they have published in their August issue.

Tuesday, September 02, 2008

That's silver, Jerry!

That's silver... for Mule In Action in the current Manning early access best seller roster. And the book is only getting better thanks to our great reviewers!

Labels:

Writings

Monday, September 01, 2008

Reading Calendar Irony

My six readers (according to Google Reader) will certainly appreciate the irony of my reading calendar.

Here are the two books I am currently reading:

Isn't this coincidence really fun?

Friday, August 29, 2008

Commit Risk Analysis

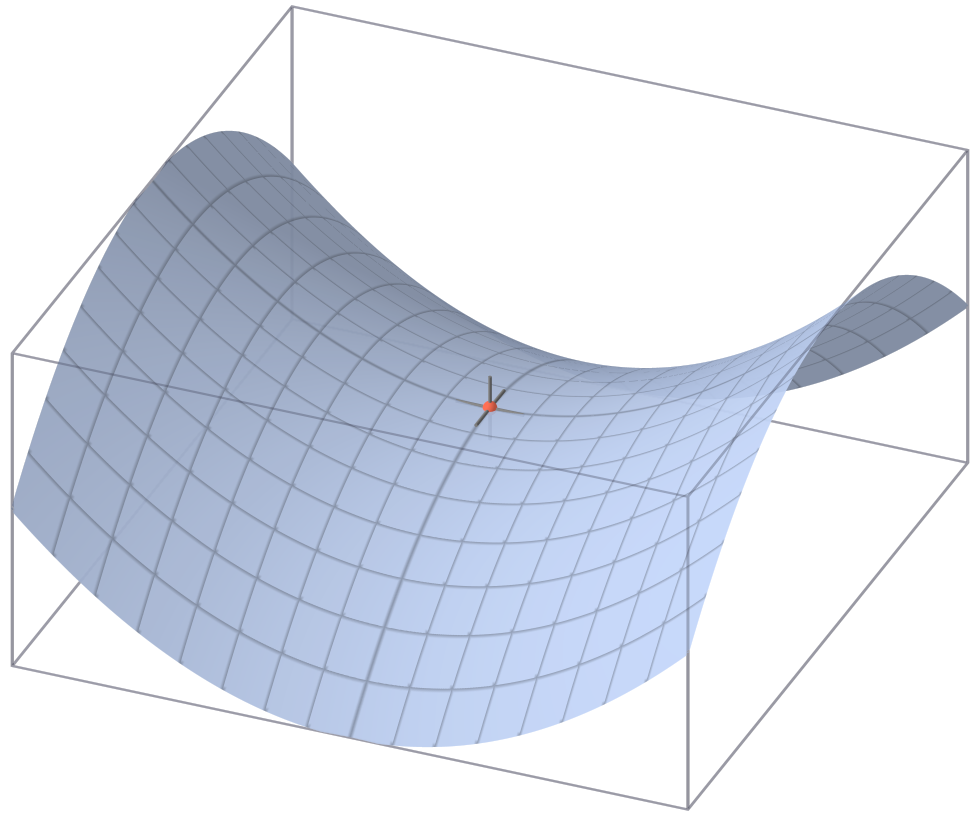

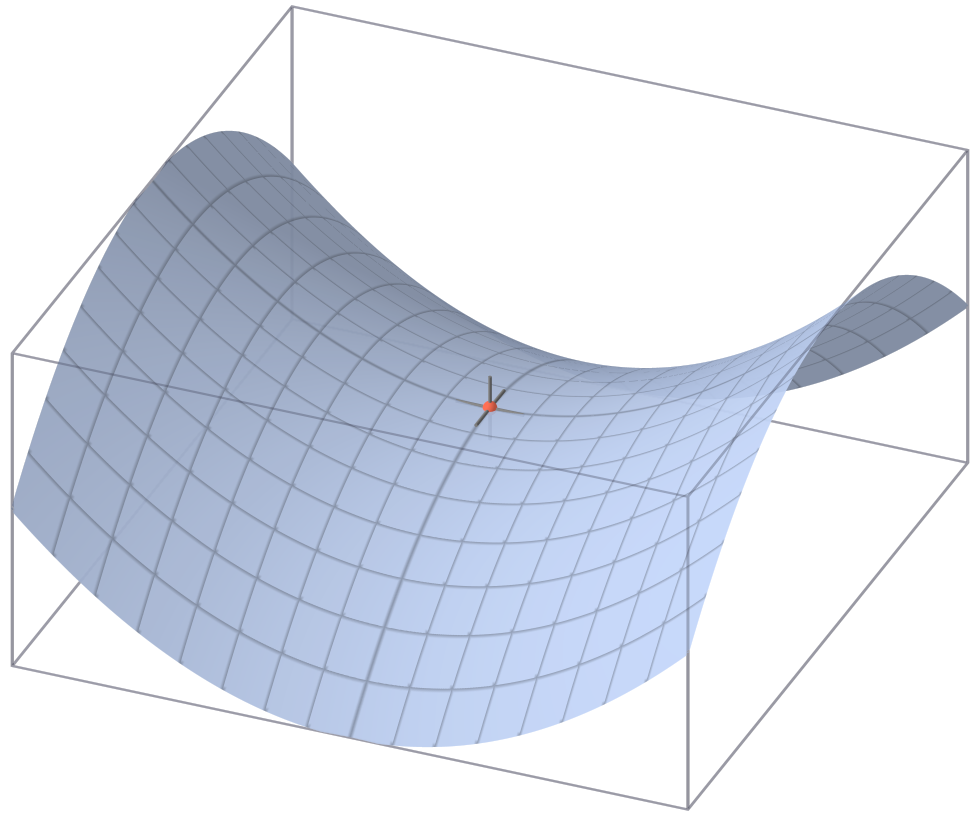

I like to compare the stability of a legacy application to a saddle point:

QA provides some lateral stability that prevents the legacy application (this funny little red dot) from falling sideways. But, still, a little push on the wrong side and down the hole the application will fall.

What legacy code typically lacks is unit tests. With unit tests, the saddle disappears and the code stability ends up in a pretty stable parabola:

In an ideal world, there would be no legacy code. In a slightly less ideal world, there would be legacy code but every time a developer touched a class, he would first write unit tests to fully cover the code he is about to modify.

Unfortunately, in our world, code is written by human beings. Human beings turned the original code into legacy code and human beings will maintain and evolve this legacy code. Hence modifications to code that is not unit tested will end up checked in. Inevitably, the little red ball will drift to the wrong side of the saddle.

I came to wonder what could be a way to estimate the risk that has been introduced by changes in a code base. Basically, the risk would be inversely proportional to the test coverage of the modified class.

I came up with a very basic tool, Corian (Commit Risk Analyzer), that simply fetches the Cobertura coverage percentage for classes that were modified in Subversion over a given number of days in the past.
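To illustrate the idea only (this is not Corian's code), here is a minimal sketch that ranks modified classes by risk. It assumes the coverage ratios and the list of modified classes have already been extracted from the coverage report and the version control log, and it uses 1 minus the coverage as a simple stand-in for "inversely proportional". All names are hypothetical.

```scala
object CommitRisk {
  // coverage: coverage ratio per class, between 0.0 and 1.0 (assumed input).
  // modified: classes touched by recent commits (assumed input).
  // Returns each modified class with its risk score, riskiest first.
  // A class missing from the coverage data is treated as not covered at all.
  def riskByClass(coverage: Map[String, Double], modified: Seq[String]): Seq[(String, Double)] =
    modified.distinct
      .map(cls => cls -> (1.0 - coverage.getOrElse(cls, 0.0)))
      .sortBy { case (_, risk) => -risk }
}
```

With a coverage of 0.75 for A and 0.25 for B, and commits touching A, B and an uncovered class C, the ranking comes out as C (1.0), B (0.75), A (0.25).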

Do you know of any method or tool for estimating the risk that a series of revisions could have introduced in a code base?

Labels:

Craftsmanship,

My OSS,

Testing,

Tools

Wednesday, August 27, 2008

Bauhaus & Software Development

It took me a while to realize this but I finally noticed the deep similarities between software development and the Bauhaus school of design. My CS teacher and mentor, who was knowledgeable about almost everything, had a particular penchant for the Bauhaus: it only took me 16 years to grok why...

Quoting Wikipedia, "one of the main objectives of the Bauhaus was to unify art, craft, and technology". Is this unification not realized in software development? In fact, should this unification not be the basis of successful, satisfying and fulfilling endeavors in this field?

Technology - This is the easiest one. Software development is obviously about technology, as the concrete manifestation of scientific and engineering progress. The smaller the transistors, the denser the processors, the more powerful the computers, the happier the software developers!

Craft - Uncle Bob speaks about it better than I could ever dream of. He has just proposed a fifth element for the Agile Manifesto:

Craftsmanship over Execution

Most software development teams execute, but they don’t take care. We value execution, but we value craftsmanship more.

Art - The connection between software development and art is often controversial. Here, I will let Kent Beck convince you, with an excerpt of Implementation Patterns:

Aesthetics engage more of your brain than strictly linear logical thought. Once you have cultivated your sense of the aesthetics of code, the aesthetic impressions you receive of your code is valuable feedback about the quality of the code.

Try with this:

Or that:

And now with that:

Labels:

Craftsmanship

Tuesday, August 26, 2008

Just Read: Managing Humans

Michael Lopp's capacity to deconstruct and analyze every aspect of both software engineering management and nerd internal mechanics is simply outstanding.

This book is not only insightful and amusing, it is also a looking glass in which all the intricacies of managing humans are revealed.

A. Must. Read.

Labels:

Readings

Blog Inaction

Larry O'Brien said it better than I could ever formulate it, so here you go:

I've been busier than some metaphorical thing in some metaphorical place where things are really busy.

And here is what kept me busy, and is still keeping me busy these days:

Monday, August 18, 2008

Silverfight

The Register is running what seems to be a balanced review of (the yet to come) Microsoft Silverlight (2.0).

It seems balanced because you have ten pros and ten cons, which might suggest that adopting Silverlight is merely a matter of taste (XAML is attractive) or politics (like for the NBC Olympics). But I think that, if you weigh the different pros and cons, you might end up with a balance that leans to one side (I will let you guess which one).

The availability of designer tools that run on the Macintosh platform will certainly be critical if Microsoft wants to entice designers out of the Macromedia world.

Similarly, the heroic efforts deployed in Moonlight to make Silverlight cross-platform will be key to the overall success of this otherwise proprietary platform.

As of today, here is how a simple example comparing Silverlight and Flash runs on my machine:

Yep, this is a big empty white box, with statistics in the status bar about how fast Silverlight renders it. If Microsoft is serious about dethroning Flash, which I am not entirely convinced of, they will have to get past this kind of... emptiness.

Wednesday, August 13, 2008

Just Read: Implementation patterns

If you are an aficionado of formal pattern books, you might be disappointed by the latest book from Kent Beck. This book is more about a mentor sharing his experience than a succession of diagrams, code samples and rules for applying or not a particular pattern.

In this book, Kent clearly took the decision to engage the reader in a direct manner: there is no fluff, just the nitty-gritty. Just years of experience and experiments summarized in less than 150 pages. I leave it to your imagination to figure out how dense the book is. It is sometimes so rich that I came to wish that a little bit of code or a neat hand-drawn schema could be added here and there, just to make a particular pattern more digestible for a slow brain like mine.

There is an intense tension in this book: I was fulminating after reading some of Kent's takes, where he advocates counterintuitive approaches as far as defensive coding is concerned. And then I reached the last part ("Evolving Frameworks") and it struck me: so far in the book, Kent was not coding for public APIs. And it struck me again: Kent is a master, he adapts his way of coding to the context.

Let Kent Beck talk to you: buy this short book and listen to what he wants to share with you.

Labels:

Readings

Monday, August 11, 2008

GMail Auto-resizing Rules!

Just a quick "thank you" to GMail's team for the new auto-resizing feature, which makes the edit box use the available screen real estate efficiently.

This is good.

Labels:

Google

Wednesday, August 06, 2008

Cuil Geared To Hidden Success

It took me a while to realize this but Cuil, the new flashy search engine that randomly displays porn and has a name that looks like the French word for an unspeakable part of the male anatomy, bears in its name the inevitable fate of a hidden success.

Let me explain. To succeed on the webernets, you need two Os in your domain name. Amazon made the risky choice of an M-separated-double-A and they are doing surprisingly well, so far. But anyway, if we narrow down the field to search engines only, it is pretty obvious that the double-O is de rigueur for success.

So what on Earth happened to the guys at Cuil? Well, you see, the trick is in the pronunciation. It is pronounced "cool". Here you go! The double-O, which was hidden in the domain name, becomes visible when you say it.

Consequently, a hidden double-O can only lead to a hidden success. Which is not a failure by the way.

Labels:

Fun

Tuesday, July 29, 2008

Tainted Heroes?

I am perplexed by Microsoft's recent {Open Source} Heroes campaign.

Believe me, I am working very hard to fight any bias against the stuff that comes from Redmond (just out of respect for the great people they have and the cool stuff they are cooking in their labs). But for this campaign I cannot help but smell something fishy. Maybe because I am (lightly) active in the .NET open source community.

Anyway, for this campaign, Microsoft was granting a Hack Pack containing a trial copy of Windows Server 2008 and Visual Studio 2008 to open source developers all around the world. How is that going to help the .NET open source community? I do not have the faintest idea. But I can easily see how it can benefit Microsoft, especially when the trial period is over and the hero needs to buy a license.

I do believe there are real open source minded people at Microsoft. I also believe they are not allowed to come anywhere near the marketing department. They probably wear a special dress and have "to ring bells to warn people of their presence" too.

My open source experience in .NET land, compared to the one I have in Java-lala-land, suggests that the last thing Microsoft developers need is yet another tool lock-in scheme. I find .NET developers deeply engrossed in their IDE, sorry, in the IDE, to the extent that any project that is not formatted and designed for Visual Studio is a real challenge.

A few years ago, I made the choice to use SharpDevelop for developing NxBRE. The first versions of this IDE were pretty rough, but I was immediately convinced by the fact that a version of SharpDevelop was not tied to a particular version of .NET. This establishes the necessary distinction between the CLR and the SDK on one hand, and the development environment on the other hand.

So what about our heroes? Open source developers do not need time-trialed (or not) vendor-specific tooling. They need 36-hour days and 2 extra arms, something Microsoft cannot do anything about. They also need a community of like-minded developers, something Microsoft should stop smothering and start fostering.

Sunday, July 27, 2008

Ouch of the day

This book is built on a rather fragile premise: that good code matters. I have seen too much ugly code make too much money to believe that quality of code is either necessary or sufficient for commercial success or widespread use. However, I still believe that quality of code matters even if it doesn't provide control over the future. Businesses that are able to develop and release with confidence, shift direction in response to opportunities and competition, and maintain positive morale through challenges and setbacks will tend to be more successful than businesses with shoddy, buggy code.

Labels:

Craftsmanship

Thursday, July 24, 2008

GR8 JRB MOO!

As this screenshot shows, Mercury does not care if I have a 24" monitor. It is going to be 200 pixels and not one more, sir! Get over it, sir!

Has anybody tested this page? In the same vein: has anybody tested Outlook Web Access 2007? It behaves like a web mail from the early 2000s.

Why do expensive enterprise tools feel they must be sub-standard in their GUIs? Why is it that as soon as a product is deemed enterprise grade, the game changes and it suddenly is all about paying six-figure license fees for something an open source project would be embarrassed by?

Will vendors react, or will they just try to milk the cow until it decides to kick them away? Or does the cow care at all? Maybe the cow likes arid and painful tools because they feel enterprisey?

Moo.