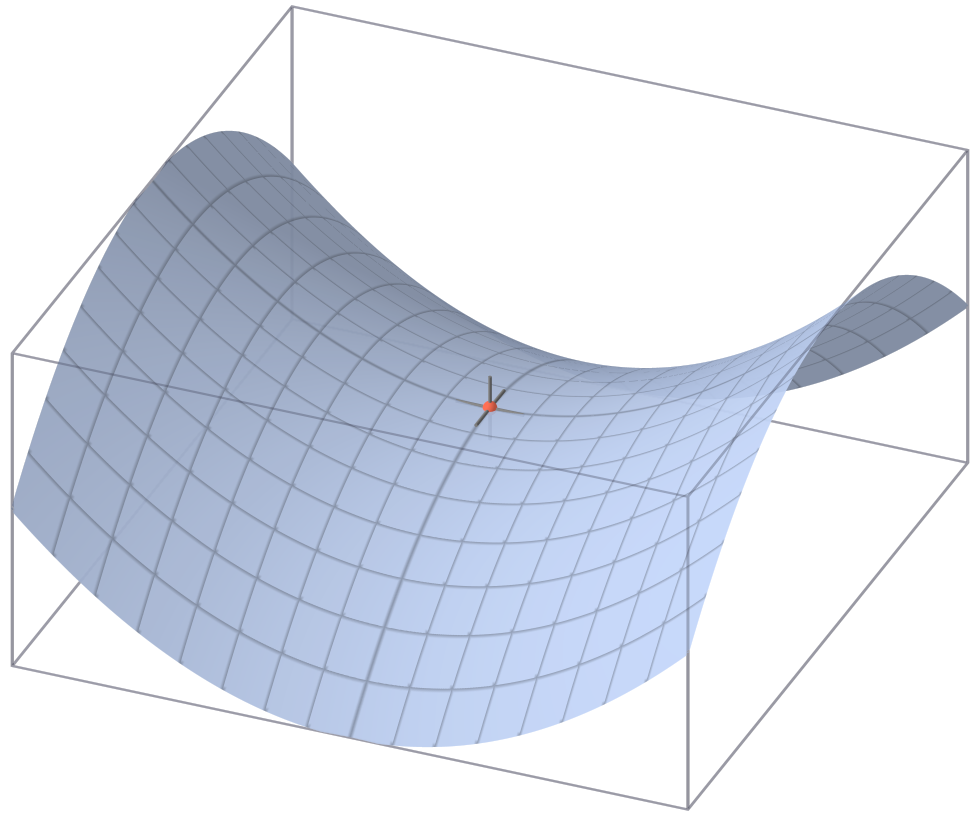

QA provides some lateral stability that prevents the legacy application (this little funny red dot) to fall sideways. But, still, a little push on the wrong side and down the hole the application will fall.

QA provides some lateral stability that prevents the legacy application (this little funny red dot) to fall sideways. But, still, a little push on the wrong side and down the hole the application will fall.What the legacy code typically misses is unit tests. With unit tests, the saddle disappear and the code stability ends up in a pretty stable parabola:

In an ideal world, there will be no legacy code. In a little less ideal world, there will be legacy code but every time a developer would touch any class, he would first write unit tests to fully cover the code he is about to modify.

In an ideal world, there will be no legacy code. In a little less ideal world, there will be legacy code but every time a developer would touch any class, he would first write unit tests to fully cover the code he is about to modify.Unfortunately, in our world, code is written by human beings. Human beings turned the original code into legacy code and human beings will maintain and evolve this legacy code. Hence there will be modifications on non unit tested code that will end up checked in. Inevitably, the little red ball will drift on the wrong side of the saddle.

I came to wonder what could be a way to estimate the risk that has been introduced by changes in a code base. Basically, the risk would be inversely proportional to the test coverage of the modified class.

I came with a very basic tool, Corian (Commit Risk Analyzer), that simply fetches the Cobertura coverage percentage for classes that were modified in Subversion for a particular number of days in the past.

Do you know of any method or tool for estimating the risk that a series of revisions could have introduced in a code base?