Did it need to be so high?

JMS is a simple yet powerful API that allows developers to build asynchronous and loosely coupled systems quite easily. In fact, it is so easy that its usage usually expands very rapidly across a company's IT landscape until it hits a wall as high, austere and disabling as the Berlin Wall once was, namely: the firewall.

JMS listeners rely on specific ports, usually dynamically assigned, which generally prevents their use through a firewall because administrators are reluctant to open ranges of ports. Fortunately, there is a highway that goes through this wall: it is called HTTP. It comes with a particular traffic regulation: it is a one-way road that goes from the inside (the Intranet zone) to the outside (the external DMZ, which we will call the Internet zone).

Mule to the rescue!This post demonstrates how to leverage

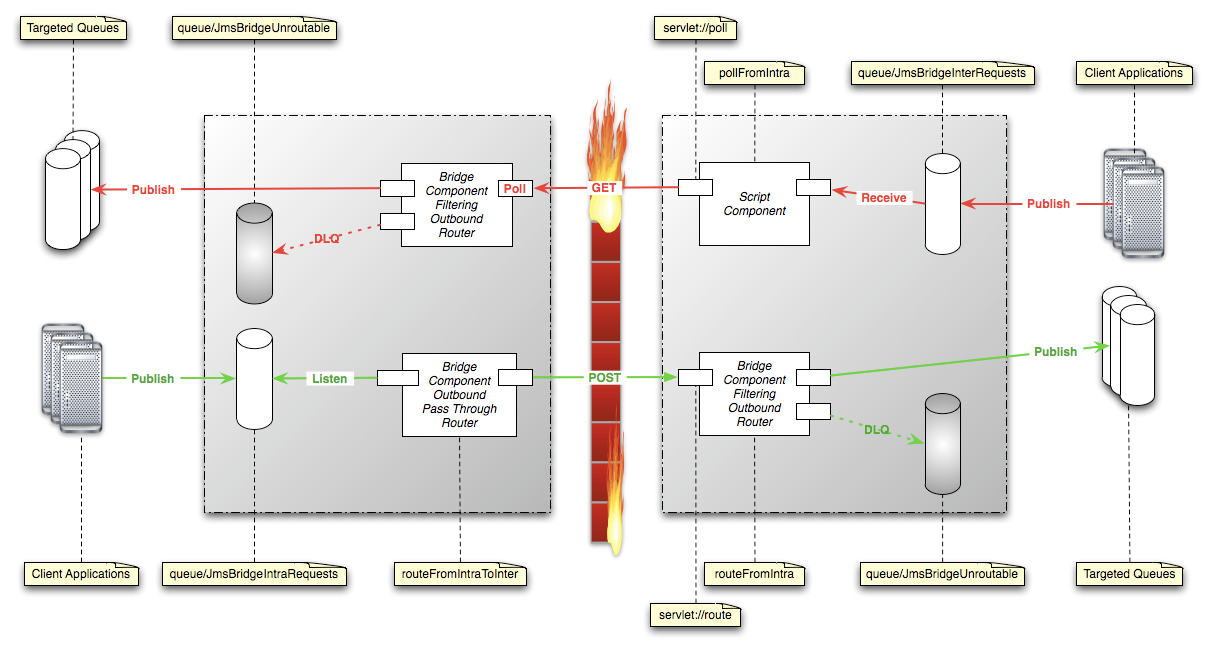

Mule, the open source ESB, for bridging JMS Queues that reside on the both sides of the firewall through this highway. The following deployment diagram details what is involved in this scenario: as you can see, Mule is not deployed as a standalone application but is embedded in a J2EE web application and deployed on a server. The reasons for this approach are multiple:

- System administrators can be reluctant to deploy new tools: deploying Mule as a web application on your company's standard J2EE server alleviates this resistance.

- The inbound queues used by the bridge can be hosted by the server itself, leading to a neat, consistent and self-contained component that does not interact with any external system.

- Using the servlet connector of Mule lets you leverage the well-known web stack provided by your favorite J2EE server.

When an application wants to send a message to another zone, it does so by sending the message to a dedicated queue in its own zone, which acts as a "letter box". The routing itself is based on a specific JMS message property (named "internet_destination" or "intranet_destination") that contains the alias of the targeted queue. This bridge uses aliases instead of real server and queue names to reduce coupling and to limit routing to pre-defined destinations.

The following diagram presents the different components involved in the bridge. Routing from the intranet to the Internet is shown in green; the other direction is shown in red. The arrows point in the direction of the message flow, not in the direction of the call made by a particular caller. The gray boxes represent the application servers involved in the bridge, together with the Mule and JMS components they host.

From Intranet to Internet

A Mule component subscribes to the letter box queue in the intranet zone and listens for messages published there. When it gets a new message, it sends it by HTTP POST to the Mule servlet in the Internet zone. This servlet is the endpoint of a Mule component that performs the routing based on the aforementioned JMS property and publishes the message to the targeted queue (or stores it in a DLQ, also known as a Dead Letter Channel, in case the target is unknown).
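The bridge expresses this routing in Mule configuration, not in compiled code. Purely to illustrate the logic, here is a minimal Java sketch of alias-based routing with a dead letter fallback. The class name `AliasRouter`, the alias `orders` and the queue names are hypothetical; only the property name "internet_destination" comes from the description above.

```java
import java.util.Map;

/** Illustrative only: resolves a destination alias to a pre-defined queue name. */
final class AliasRouter {
    // Property name from the article; everything else below is a made-up example.
    static final String PROPERTY = "internet_destination";
    static final String DEAD_LETTER_QUEUE = "bridge.dlq"; // hypothetical DLQ name

    private final Map<String, String> routes;

    AliasRouter(Map<String, String> routes) {
        // Defensive copy: routes are fixed once the router is configured.
        this.routes = Map.copyOf(routes);
    }

    /** Returns the real queue for the alias found in the message properties, or the DLQ. */
    String resolve(Map<String, String> messageProperties) {
        String alias = messageProperties.get(PROPERTY);
        if (alias == null) {
            return DEAD_LETTER_QUEUE; // no routing property at all
        }
        // Unknown aliases are never routed anywhere but the DLQ.
        return routes.getOrDefault(alias, DEAD_LETTER_QUEUE);
    }

    public static void main(String[] args) {
        AliasRouter router = new AliasRouter(Map.of("orders", "queue/ExternalOrders"));
        System.out.println(router.resolve(Map.of(PROPERTY, "orders")));
        System.out.println(router.resolve(Map.of(PROPERTY, "unknown")));
    }
}
```

Because senders only ever see aliases, adding or removing a route amounts to changing one entry in the route table, which is the property the bridge relies on to keep routing limited to pre-defined destinations.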

From Internet to Intranet

The other direction implies bringing messages back into the intranet zone, because no sending can be initiated from the Internet zone. The bridge achieves this with a Mule component in the intranet zone that regularly polls another Mule component in the Internet zone. The latter uses the power of scripting in Mule to define a component that consumes messages from the Internet letter box only when requested by a call from the intranet zone.
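The "consume only when requested" behaviour can be modelled in a few lines. The sketch below is not the Mule script used by the bridge: it is a hypothetical in-memory stand-in (the class `OnDemandLetterBox` and the `maxMessages` limit are assumptions) showing that messages stay in the letter box until a poll arrives from the intranet zone.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

/** Illustrative model of an Internet-zone letter box drained only on request. */
final class OnDemandLetterBox {
    private final Queue<String> letterBox = new ConcurrentLinkedQueue<>();

    /** Applications in the Internet zone drop their messages here. */
    void publish(String message) {
        letterBox.add(message);
    }

    /** Invoked once per poll coming from the intranet zone; returns at most maxMessages. */
    List<String> handlePoll(int maxMessages) {
        List<String> batch = new ArrayList<>();
        String next;
        // Stop as soon as the batch is full so no message is removed without being returned.
        while (batch.size() < maxMessages && (next = letterBox.poll()) != null) {
            batch.add(next);
        }
        return batch;
    }
}
```

Nothing leaves the letter box between two polls, so the Internet zone never has to open a connection toward the intranet.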

Your Turn Now

As you can see, this example does not cover temporary destinations (used by requesters, for example), nor the reply-to feature of JMS. Note that, with a little extra work, it would be fairly easy to support the case of reply-to targeting non-temporary destinations. This would be done by rewriting the destination JMS property in the messages entering the bridge so that the reply channel goes through a pre-configured route.

Similarly, this scenario lacks the retry mechanism that would be needed if an HTTP transfer failed, as well as a possible message staging step where payloads could be scanned for viruses before being routed to the intranet destination.
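As a starting point for the missing retry, here is a minimal sketch of a generic retry helper with exponential backoff. It is an assumption about how one might wrap the HTTP transfer, not part of the bridge: the `Retry` class, its parameters and the doubling delay are all hypothetical choices.

```java
import java.util.concurrent.Callable;

/** Illustrative retry helper; not part of the bridge described in this post. */
final class Retry {
    /**
     * Runs the action until it succeeds or maxAttempts is reached,
     * doubling the pause between attempts. Rethrows the last failure.
     */
    static <T> T withRetries(Callable<T> action, int maxAttempts, long initialDelayMillis)
            throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        long delay = initialDelayMillis;
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.call();
            } catch (Exception e) {
                last = e;
                if (attempt < maxAttempts) {
                    Thread.sleep(delay); // wait before the next attempt
                    delay *= 2;          // exponential backoff
                }
            }
        }
        throw last; // every attempt failed
    }
}
```

A caller would pass the HTTP POST as the action, for example `Retry.withRetries(() -> postToInternetZone(message), 5, 500L)`, where `postToInternetZone` is a hypothetical method. Messages that still fail after the last attempt would need to be kept somewhere safe, such as the DLQ mentioned earlier.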

In fact, this example gives you a fairly complete view of what can be achieved with Mule, a little bit of configuration and not a single line of compiled code.

The fact that no coding is involved matters a great deal in production: any skilled system administrator can now activate new routes or deactivate existing ones by simply tweaking the Mule configuration, without involving a software developer. In that sense, this JMS bridge becomes a first-class citizen of the IT infrastructure.

Do not wait any longer and fetch the beast of burden that will massage your messages! But leave the cow alone...